Updated: Apr 3

Monday, July 22, 2024

This week's edition

Happy Monday all!

When it comes to conversations in the data space, some weeks are hot and some weeks are not.

Last week was certainly hot. My feed was full of amazing posts and conversations!

Picking the top three proved to be a real challenge this week, but here they are.

In this issue, we'll be diving into:

📈 What's hot and what's not: Gartner releases their hype cycle for data management

🤖 The AI hype train continues to slow down

💡 What data teams can learn from Obama

😄 Your Weekly Dose of Data Hilarity

I hope you enjoy the issue!

Best regards,

Dave

📈 What's hot and what's not: Gartner releases their hype cycle for data management

Consultant Gregor Zeiler provides a great recap in his post of the major changes in the Gartner Hype Cycle for Data Management this year.

Items that I found most interesting were points made around the data lakehouse architecture and the data mesh framework.

Zeiler notes that, as per Gartner, the data lakehouse architecture will reach the plateau before the data lake. This makes sense to me, given the balance that lakehouse strikes between speed, structure, and governance. I would even go a bit further to say that pure-play data lakes will be considered obsolete before they reach the plateau.

The other interesting point in the post is that Gartner is declaring data mesh obsolete. While I believe the full vision of data mesh is likely difficult to achieve (even books on the topic use fictitious companies vs real-world examples), I still like aspects of it. I find myself going back to data mesh principles often, but adapting them to the realities of my clients' culture and day-to-day operations. So, in short, while it's hard to implement in its purest form, I believe there is a ton of good in the framework. Just pick what works for you and don't feel like it has to be an all-or-nothing situation.

🤖 The AI hype train continues to slow down

Author and speaker Joe Reis offers up a post with a refreshing message for those who are getting tired of the GenAI hype.

In the post and the accompanying Substack article, Reis expresses frustration with the constant vendor push to sell AI into organisations without any real value present. Reis reminds buyers of previous data hype cycles around the big data and data science movements, which left organisations with lots of tooling but little in terms of value being delivered.

I agree with everything Reis puts forward here and even pointed in this direction in an earlier issue of Data Clarity. Every vendor you come across now has an AI story, but it's up to us as data professionals to get beyond the story, understand the value, and decide whether or not we even need AI to solve the problem at hand.

💡 What data teams can learn from Obama

The biggest problem I hear when talking to CIOs is that data teams are just not getting things done. They worry about how the business perceives their team and are frustrated at the progress being made. I can understand this. After all, talking about solving a problem is much easier than rolling up our sleeves and solving it, right? 🤪

Vendors will have you believe that if you just pick the right architecture, framework, or technology, you will be able to meet the needs of your organisation. But that's not the case. I've often joked with customers that I could build an enterprise data platform on MS Access. I'm glad nobody has taken me up on the task, but the point is made to illustrate that the tooling selection isn't as important as it seems. So don't over-rotate on that stuff.

Actually meeting the needs of your organisation involves getting down to work and getting things done. It means meeting with stakeholders to understand needs, having tough prioritisation discussions around fixed capacities and commitments, putting egos aside and listening to critical design feedback, shipping to production, and working with stakeholders on change management to ensure adoption. This is the hard work, but it's what drives results.

So when I saw this video from Barack Obama, posted by entrepreneur Steven Bartlett, on the importance of getting stuff done, I had to include it in this newsletter.

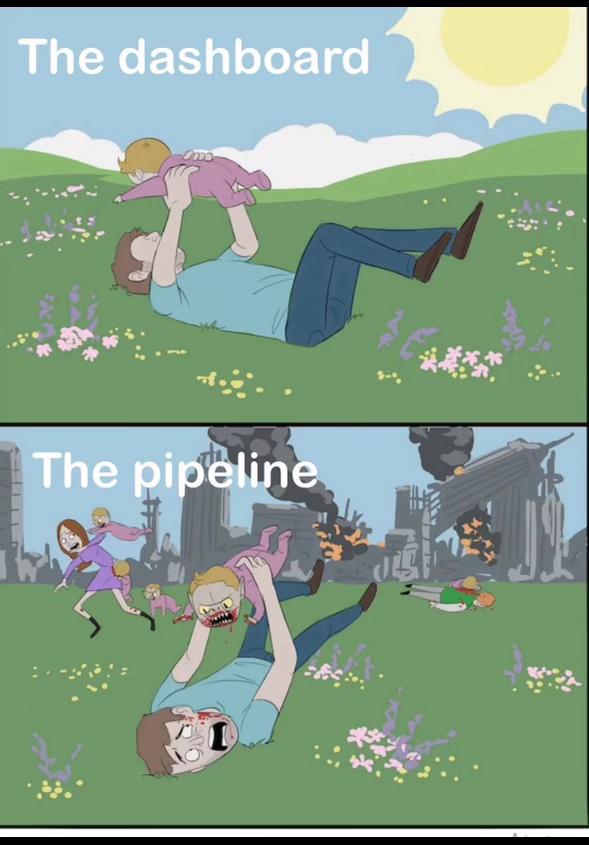

😄 Your Weekly Dose of Data Hilarity

As long as someone is on call to hit refresh!